When the Exchange Matters as Much as the Answer

Let me introduce the subject with an example: a fashion store.

A customer walks in hoping to discover clothes or accessories she might like and, possibly, to buy them.

In the store, a sales assistant's mission is to inform her, help her, and, as far as possible, make the sale.

If the store is well managed, the assistant will also be tasked with making her want to come back and recommend the place.

That will not be due only to the product itself but also, and perhaps above all, to the fact that the purchase was a pleasant experience, which is a consequence of the quality of the relationship between customer and assistant.

Communication is the foundation of all social life. […] From birth to death, individuals exchange. It is a shared activity that necessarily puts two or more people into psychological contact¹.

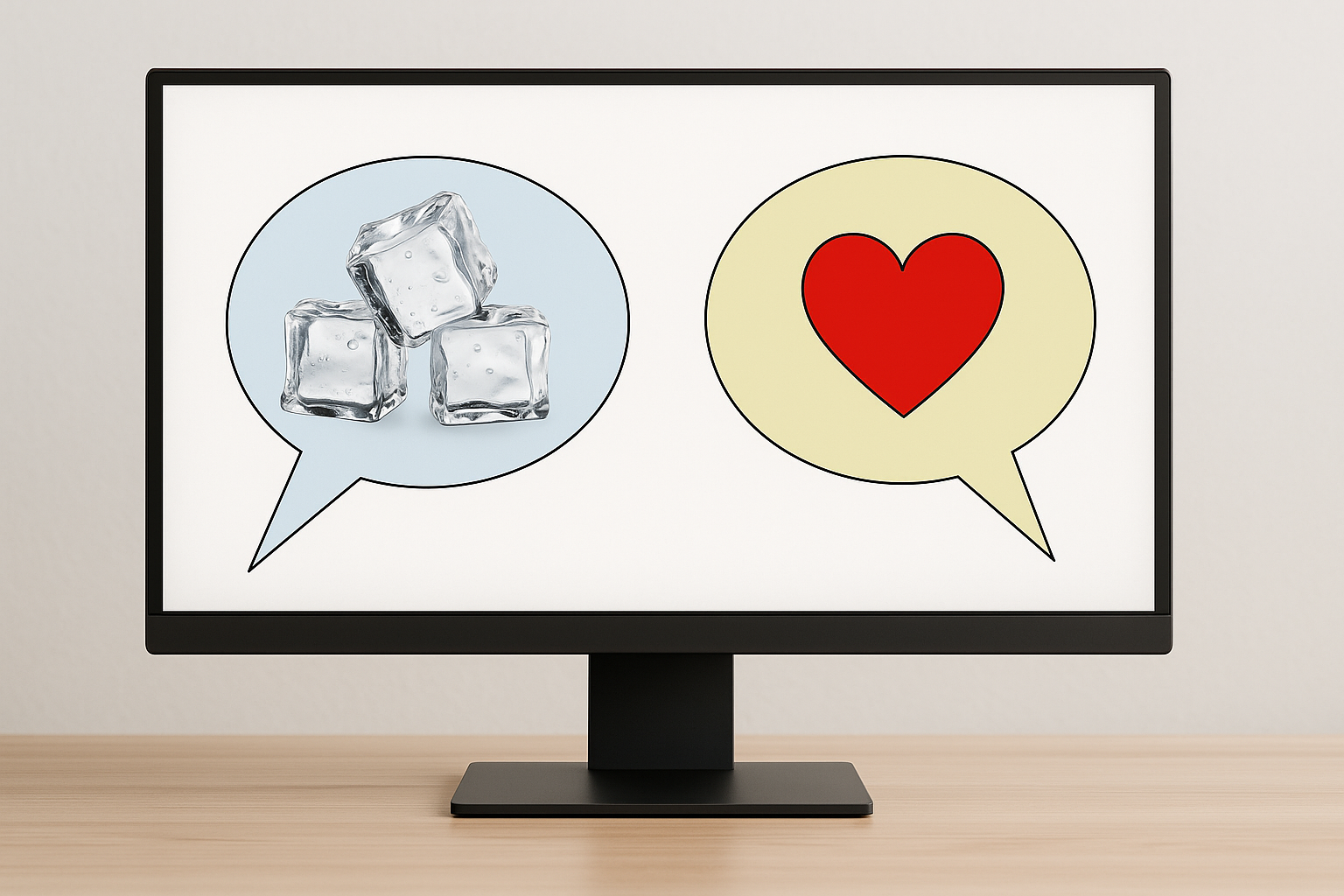

Every communication has a content aspect and a form aspect which, together, create a relationship. That relationship, in turn, qualifies the whole communication.

Now let us consider the case of a customer who walks into an auto parts store to replace a burnt-out headlight bulb. He brings the old bulb and gives the salesperson the make and model of his car. The salesperson opens a drawer and hands over an equivalent bulb, which the customer pays for at the counter.

In this case, the goals are different. The seller's is to supply a specific part efficiently; the buyer's is to solve a practical problem as quickly as possible. The relationship between them is superficial and minimal.

Transposing this to communication with an AI

Some users need concrete information, obtained in minimum time and without frills. The relational aspect is reduced to the bare minimum. Others are software developers who want a fast tool that provides a technical answer to a purely technical need.

There is no conversation, just a utilitarian exchange, precise and concrete.

But those are by no means the most common uses. The fact that OpenAI currently serves some seven hundred million users suggests that the public turns to AI for more general, and thus more conversational, requests.

And as Paul Watzlawick² observed:

“One cannot not communicate”, since even the wish not to communicate is itself a communication.

The move to GPT-5: a loss of relational warmth?

With the arrival of GPT-5, many users have felt that the general public is being neglected, and they complain of a loss of the relational quality that characterized GPT-4.

For example, faced with the same simple question:

GPT-4 (relational style)

User: Can you explain the theory of multiple intelligences?

GPT-4:

Of course 😊. It’s an approach developed by Howard Gardner, which suggests that we don’t have a single intelligence but several forms of intelligence. I can present them to you one by one with examples from everyday life, if you like.

(The tone is engaging; the answer offers an option and includes a small emotional signal.)

GPT-5 (more utilitarian style)

User: Can you explain the theory of multiple intelligences?

GPT-5:

The theory of multiple intelligences, developed by Howard Gardner, identifies several forms of intelligence: linguistic, logical-mathematical, spatial, musical, kinesthetic, interpersonal, intrapersonal, and naturalistic.

(A factual, direct answer, but with no interaction and no offer to personalize the explanation.)

Both answers are factually correct, but they do not offer the same experience: the first feels like an exchange with an attentive interlocutor, the second like consulting a dictionary. And for a general-purpose AI, that difference is not trivial: it determines whether people want to come back.

From the sales assistant to AI

The customer and the sales assistant in my first example have no ambition of becoming friends, and neither of them is misled by the warmth of the exchange. Each remains clear about their respective roles and positions.

Nevertheless, the sales assistant was certainly hired and trained not only for her knowledge of products and sales techniques, but also for her relational skills.

In conclusion

The sales assistant in the example is trained not only to know the products, but also to create a connection, however brief, with the customer. That connection does not change the commercial nature of their exchange, but it changes how the exchange is experienced.

A general-purpose AI should be designed with the same logic: accuracy and relevance, yes, but also a relational quality that makes people want to come back and that satisfies users as much through form as through content.

And the more habitual the use of AI becomes, the more the public will choose the one that provides the most satisfying experience.

The relationship is not an optional extra: it is a central feature.

Without relational quality, will a general-purpose AI ever be more than a sophisticated search engine?

¹ Pierre Simon and Lucien Albert, Las relaciones interpersonales, Ed. Herder.

² Paul Watzlawick, Pragmatics of Human Communication.